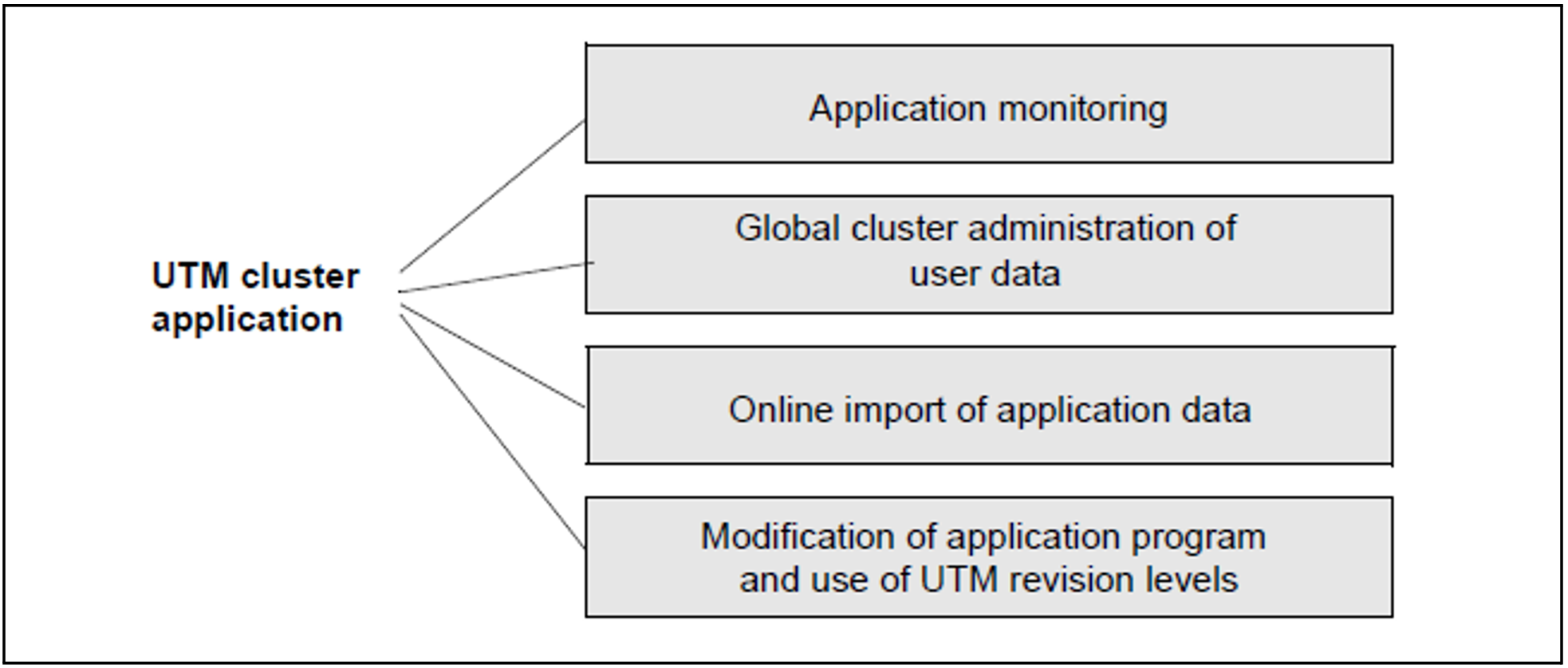

The figure below provides an overview of the central high availability functions of a UTM cluster application:

Figure 37: Central high availability functions of a UTM cluster application

Application monitoring and measures on failure detection

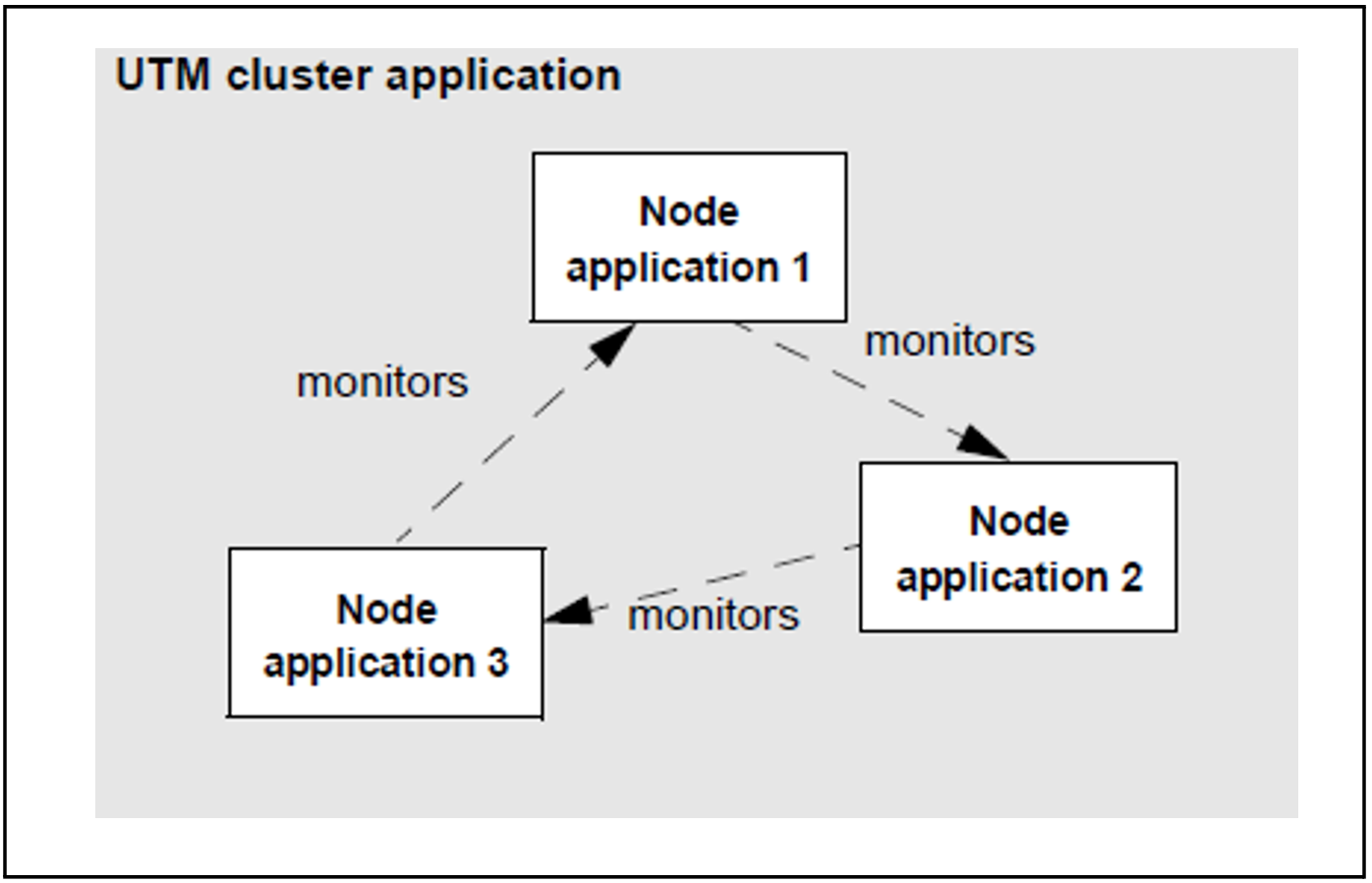

It is possible to monitor UTM cluster applications without the need for additional software. The node applications monitor each other. If a node application fails, it is possible to trigger follow-up actions.

Figure 38: Circular monitoring between three node applications

When a node application is started, it is decided dynamically which other node application it is to monitor and which other node application is to monitor it. These monitoring relationships are entered in the cluster configuration file and are canceled when the node application is terminated. Once a monitoring relationship has been set up, the existence of the monitored node application is checked at an interval that is specified at generation.
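
The circular arrangement in Figure 38 can be illustrated with a small model. This is a minimal Python sketch, assuming that the active node applications form a ring in which each node monitors its successor; the actual assignment rules used by openUTM and the format of the cluster configuration file are not described here, and all function names are hypothetical.

```python
from typing import Dict, List


def monitoring_ring(active_nodes: List[str]) -> Dict[str, str]:
    """Map each node application to the node application it monitors.

    Hypothetical model: every node monitors its successor in the list and the
    last node monitors the first, so each node is monitored exactly once.
    """
    if len(active_nodes) < 2:
        # A lone node application has no partner to monitor.
        return {}
    return {
        node: active_nodes[(i + 1) % len(active_nodes)]
        for i, node in enumerate(active_nodes)
    }


def on_start(active_nodes: List[str], new_node: str) -> Dict[str, str]:
    """Recompute the relationships when a node application is started."""
    return monitoring_ring(active_nodes + [new_node])


def on_termination(active_nodes: List[str], node: str) -> Dict[str, str]:
    """Cancel the relationships of a terminated node and close the ring again."""
    return monitoring_ring([n for n in active_nodes if n != node])


# Three node applications, as in Figure 38.
print(on_start(["NODE1", "NODE2"], "NODE3"))
# {'NODE1': 'NODE2', 'NODE2': 'NODE3', 'NODE3': 'NODE1'}
```

Recomputing the whole ring on every start or termination keeps the model simple; it guarantees that no node application is left unmonitored as long as at least two are running.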

The availability of a node application is monitored in two stages. The second stage is initiated only if the first stage cannot exclude the possibility that the monitored node application has failed.

- The first stage consists of an exchange of messages with the monitored node application.
- The second stage consists of an attempt to access the KDCFILE of the monitored node application in order to check whether it is still active.
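
The escalation logic of the two stages can be sketched as follows. This is a minimal Python illustration, not the openUTM implementation: the probes `exchange_message` and `kdcfile_shows_active` and the polling loop `monitor` are hypothetical stand-ins for the message exchange, the KDCFILE access and the generated check interval.

```python
import time
from typing import Callable


def node_has_failed(
    exchange_message: Callable[[], bool],
    kdcfile_shows_active: Callable[[], bool],
) -> bool:
    """Two-stage failure check for a monitored node application (sketch).

    exchange_message: hypothetical probe, True if the monitored node replied.
    kdcfile_shows_active: hypothetical probe, True if accessing the monitored
    node's KDCFILE shows that the application is still active.
    """
    # Stage 1: a reply to the message rules out a failure.
    if exchange_message():
        return False
    # Stage 2 runs only because stage 1 could not rule out a failure.
    if kdcfile_shows_active():
        return False
    # Neither stage could exclude a failure.
    return True


def monitor(
    check: Callable[[], bool],
    interval_sec: float,
    on_failure: Callable[[], None],
    max_checks: int,
) -> bool:
    """Run `check` every `interval_sec` seconds; call `on_failure` on failure."""
    for _ in range(max_checks):
        if check():
            on_failure()  # e.g. start the user-defined follow-up procedure
            return True
        time.sleep(interval_sec)
    return False
```

Passing the probes in as callables keeps the decision logic separate from the transport and file-access details, which depend on the platform.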

In the event that failure of a node application is detected, you can use a procedure or script to initiate follow-up actions. The content of the procedure or script depends on the actions and the platform on which the node applications are running and is defined by the user.

For more detailed information on application monitoring in the cluster, see the openUTM manual “Using UTM Applications on Unix, Linux and Windows Systems”.

Global administration of application data in the cluster

Some application data such as the global memory areas GSSB and ULS and the service-specific data relating to users is administered at global cluster level. This means:

GSSB and ULS can be used globally in the cluster. As a result, the current contents of GSSB and ULS are always available in every node application. Changes made to these areas by a node application are immediately visible in all the other node applications.

Service restarts are node-independent. Following the normal termination of a node application, users can continue an open dialog service at another node application, provided that the service is not node-bound. These users can therefore continue working without delay and do not have to wait until the terminated node application becomes available again.
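
The decision whether a user can continue an open dialog service at another node application can be modelled roughly as below. This is a hypothetical Python sketch: the class `OpenDialogService`, the dictionary `cluster_service_data` and the function `can_continue` are illustrative stand-ins for the service data held in the UTM cluster files, and the sketch deliberately models only the case described above, in which the original node application has terminated normally.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass
class OpenDialogService:
    node: str         # node application at which the service was started
    node_bound: bool  # node-bound services can only be continued at that node


# Hypothetical stand-in for the user-specific service data that is kept in
# the UTM cluster files and can therefore be read by every node application.
cluster_service_data: Dict[str, OpenDialogService] = {}


def can_continue(user: str, at_node: str, normally_terminated: Set[str]) -> bool:
    """Can `user` continue an open dialog service at node application `at_node`?"""
    service = cluster_service_data.get(user)
    if service is None:
        return False  # no open dialog service to continue
    if service.node == at_node:
        return True   # same node application: ordinary service restart
    # Assumption: only the case from the text is modelled, i.e. the original
    # node application must have terminated normally.
    if service.node not in normally_terminated:
        return False
    return not service.node_bound


cluster_service_data["ALICE"] = OpenDialogService(node="NODE1", node_bound=False)
cluster_service_data["BOB"] = OpenDialogService(node="NODE1", node_bound=True)
print(can_continue("ALICE", "NODE2", {"NODE1"}))  # True
print(can_continue("BOB", "NODE2", {"NODE1"}))    # False
```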

The application data is administered in UTM cluster files which can be accessed by all the node applications. A brief description of these files can be found in the section "UTM cluster files".

For more detailed information on UTM cluster files in UTM cluster applications, see the openUTM manual “Using UTM Applications on Unix, Linux and Windows Systems”.

Special characteristics of the LU6.1 link

The LU6.1 protocol communicates via sessions. Each session has a two-part name that must be known to both partners, so session names are node-specific. Because all node applications of a UTM cluster application are generated identically, every node application contains all the session names, including those used by the other node applications. To enable the UTM application to select an appropriate session when establishing a connection to a partner application, each session must also be assigned the logical name of its node application at generation.
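
Why the node name is needed can be shown with a small selection routine. This is a hypothetical Python sketch: the `Session` fields only loosely mirror the two-part session name and the logical node name described above and are not the actual KDCDEF operands, and `select_session` is an illustrative helper, not an openUTM function.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Session:
    local_name: str   # hypothetical: local part of the two-part session name
    remote_name: str  # hypothetical: partner's part of the session name
    partner: str      # logical name of the partner application
    node: str         # logical node application name assigned at generation
    in_use: bool = False


def select_session(sessions: List[Session], own_node: str, partner: str) -> Optional[Session]:
    """Reserve a free session to `partner` that belongs to the local node.

    Every node application is generated identically and therefore knows all
    sessions; the node name filters out the sessions of the other nodes.
    """
    for session in sessions:
        if session.node == own_node and session.partner == partner and not session.in_use:
            session.in_use = True
            return session
    return None  # no free session for this node application


# Identical generation: every node application contains all four sessions.
GENERATED = [
    Session("LS01", "RS01", "PARTNER", "NODE1"),
    Session("LS02", "RS02", "PARTNER", "NODE1"),
    Session("LS03", "RS03", "PARTNER", "NODE2"),
    Session("LS04", "RS04", "PARTNER", "NODE2"),
]
```

Without the node name, NODE2 could pick a session such as LS01 whose counterpart at the partner is meant for NODE1.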

For more detailed information on generating an LU6.1 link for a UTM cluster application, see the openUTM manual “Generating Applications” under "KDCDEF control statements - sections CLUSTER-NODE and LSES" and under "Distributed processing via the LU6.1 protocol".

Online import of application data

Following the normal termination of a node application, another running node application can import messages to (OSI) LPAPs, LTERMs, asynchronous TACs or TAC queues and open asynchronous conversations from the terminated node application. The imported data is deleted in the terminated node application.

Online imports must be initiated at administration level.
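
The effect of an online import on queued messages can be pictured as a move between per-node queues. This is a simplified, hypothetical Python sketch: `online_import` and the queue layout are illustrative assumptions, and open asynchronous conversations are not modelled.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List, Set

# destination (LTERM, LPAP, asynchronous TAC, TAC queue) -> queued messages
MessageQueues = DefaultDict[str, List[str]]


def online_import(
    queues: Dict[str, MessageQueues],
    source: str,
    target: str,
    normally_terminated: Set[str],
    running: Set[str],
) -> int:
    """Move queued messages from node `source` to node `target` (sketch).

    Returns the number of imported messages. In openUTM the import is
    initiated by the administrator; here it is a plain function call.
    """
    if source not in normally_terminated:
        raise ValueError(f"{source} has not terminated normally")
    if target not in running:
        raise ValueError(f"{target} is not running")
    imported = 0
    for destination, messages in queues[source].items():
        queues[target][destination].extend(messages)
        imported += len(messages)
    # The imported data is deleted in the terminated node application.
    queues[source].clear()
    return imported


queues = {"NODE1": defaultdict(list), "NODE2": defaultdict(list)}
queues["NODE1"]["LTERM1"].extend(["m1", "m2"])
queues["NODE1"]["TACQ1"].append("m3")
print(online_import(queues, "NODE1", "NODE2", {"NODE1"}, {"NODE2"}))  # 3
```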

For more detailed information on the online import of application data in the cluster, refer to the openUTM manual “Using UTM Applications on Unix, Linux and Windows Systems”.

Online application update

The use of UTM cluster applications permits genuine 7x24 operation. The ability to modify the (static) configuration is an important aspect of high availability.

It is possible, while a UTM cluster application is running, to start up a new version of the application program, make changes to the configuration or deploy a new UTM revision level.

The operations necessary to do this are described below.

New application program

If you want to add new application programs to a UTM cluster application or modify existing programs, you do not have to shut down the entire UTM cluster application to do so.

In addition to the usual options for changing programs during operation (for example, adding programs at administration level, rebinding the load module or exchanging the load module), a UTM cluster application allows further changes to the application program during operation. To make them, you briefly shut down the corresponding node applications one after the other. In this way you can, for example, add a new load module to the application or add more programs than the reserved space allows (see the openUTM manual “Generating Applications”, RESERVE statement).

After you have added the application program, you can restart the relevant node application with the new application program.
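
The sequential shutdown and restart can be sketched as a rolling update. This is a hypothetical Python helper, assuming that `stop` and `start_with_new_program` stand in for whatever administration commands are used on the target platform; its only purpose is to show the invariant that at least one node application keeps running. The same sequence applies when a new UTM revision level is deployed.

```python
from typing import Callable, List, Set


def rolling_update(
    nodes: List[str],
    running: Set[str],
    stop: Callable[[str], None],
    start_with_new_program: Callable[[str], None],
) -> None:
    """Update node applications one after the other (hypothetical helper).

    Only one node application is down at any time, so the UTM cluster
    application as a whole remains available.
    """
    for node in nodes:
        if not running - {node}:
            # Stopping this node would leave no node application running.
            raise RuntimeError(f"cannot stop {node}: no other node is running")
        stop(node)
        running.discard(node)
        start_with_new_program(node)
        running.add(node)
```

A usage example: with `running = {"NODE1", "NODE2", "NODE3"}`, calling `rolling_update(["NODE1", "NODE2", "NODE3"], running, stop, start)` stops and restarts each node in turn, while a cluster with only one running node application raises an error instead of going down completely.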

New configuration

If you want to make changes to the configuration of a UTM cluster application that are not possible using the dynamic administration capabilities, it is not always necessary to shut down the entire UTM cluster application.

To do this, you must regenerate your UTM cluster application. After generation, you must take over the KDCFILE for each node application.

For a detailed description of the procedure and the actions required for the individual node applications, refer to the openUTM manual “Using UTM Applications on Unix, Linux and Windows Systems”.

UTM revision levels

You can deploy UTM revision levels during system operation, i.e. some of the node applications can continue to run while the revision level is being implemented in the other node applications.

To do this, you must shut down the node applications one after the other and then restart them with the new revision level.

For more detailed information on the online updating of UTM cluster applications, refer to the openUTM manual “Using UTM Applications on Unix, Linux and Windows Systems”.

Node recovery on another node computer

If a node application terminates abnormally due to a computer failure and cannot be restarted quickly on the failed computer, node recovery can be performed for this node application on any other node computer in the UTM cluster.

This makes it possible to eliminate the consequences of the abnormal termination of a node application, for example by releasing the ULS and GSSB memory areas that are global to the cluster and are locked on the abnormal termination of the node application or by signing off users who were signed on exclusively at the node application at the time of termination.

Following node recovery, node or cluster updates and online import operations are possible again.
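
The clean-up performed by node recovery can be modelled on a simplified view of the cluster-global data. This is a hypothetical Python sketch: `ClusterState`, `node_recovery` and `updates_and_imports_allowed` are illustrative names, and the rule that updates and imports must wait for all pending recoveries is an assumption drawn from the text, not a description of openUTM internals.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class ClusterState:
    # Hypothetical view of cluster-global data held in the UTM cluster files.
    gssb_locks: Dict[str, str] = field(default_factory=dict)  # GSSB name -> locking node
    uls_locks: Dict[str, str] = field(default_factory=dict)   # ULS name -> locking node
    signed_on: Dict[str, str] = field(default_factory=dict)   # user -> node signed on at
    awaiting_recovery: Set[str] = field(default_factory=set)  # abnormally terminated nodes


def node_recovery(state: ClusterState, failed_node: str) -> None:
    """Clean up after an abnormally terminated node application (sketch)."""
    for locks in (state.gssb_locks, state.uls_locks):
        # Release the global memory areas still locked by the failed node.
        for area in [a for a, owner in locks.items() if owner == failed_node]:
            del locks[area]
    # Sign off users who were signed on exclusively at the failed node.
    for user in [u for u, node in state.signed_on.items() if node == failed_node]:
        del state.signed_on[user]
    state.awaiting_recovery.discard(failed_node)


def updates_and_imports_allowed(state: ClusterState) -> bool:
    """Assumed rule: node/cluster updates and online imports need all recoveries done."""
    return not state.awaiting_recovery
```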

openUTM supports node recoveries even when interacting with databases. For this to be possible, the associated database must provide the required functions. For details, see the openUTM Release Notice.

For more detailed information on the prerequisites for and configuration of node recovery operations, see the openUTM manual “Using UTM Applications on Unix, Linux and Windows Systems”.